Microsoft and IDUN Podcast

In an intriguing podcast episode, Mark from IDUN Technologies sat down with the renowned Ivan Tashev…

Our brain-sensing earbuds are integrated with a full-stack, cloud-based solution that analyzes the recorded data and generates insights. We’re at the forefront of the next generation of wearables!

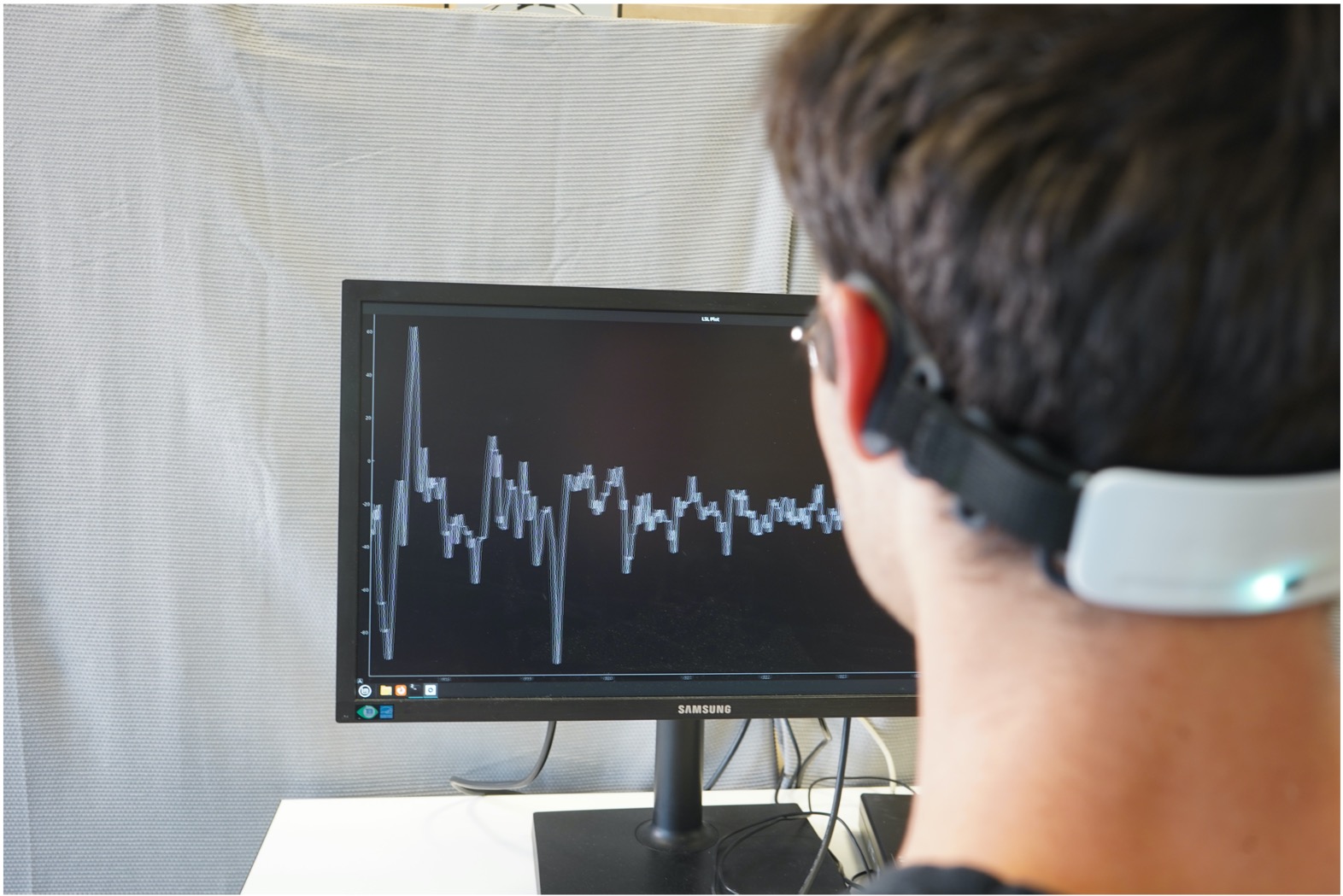

The IDUN Guardian is backed by the latest scientific breakthroughs in EEG technology. Learn more about how brain activity can be leveraged to enhance everyday life.

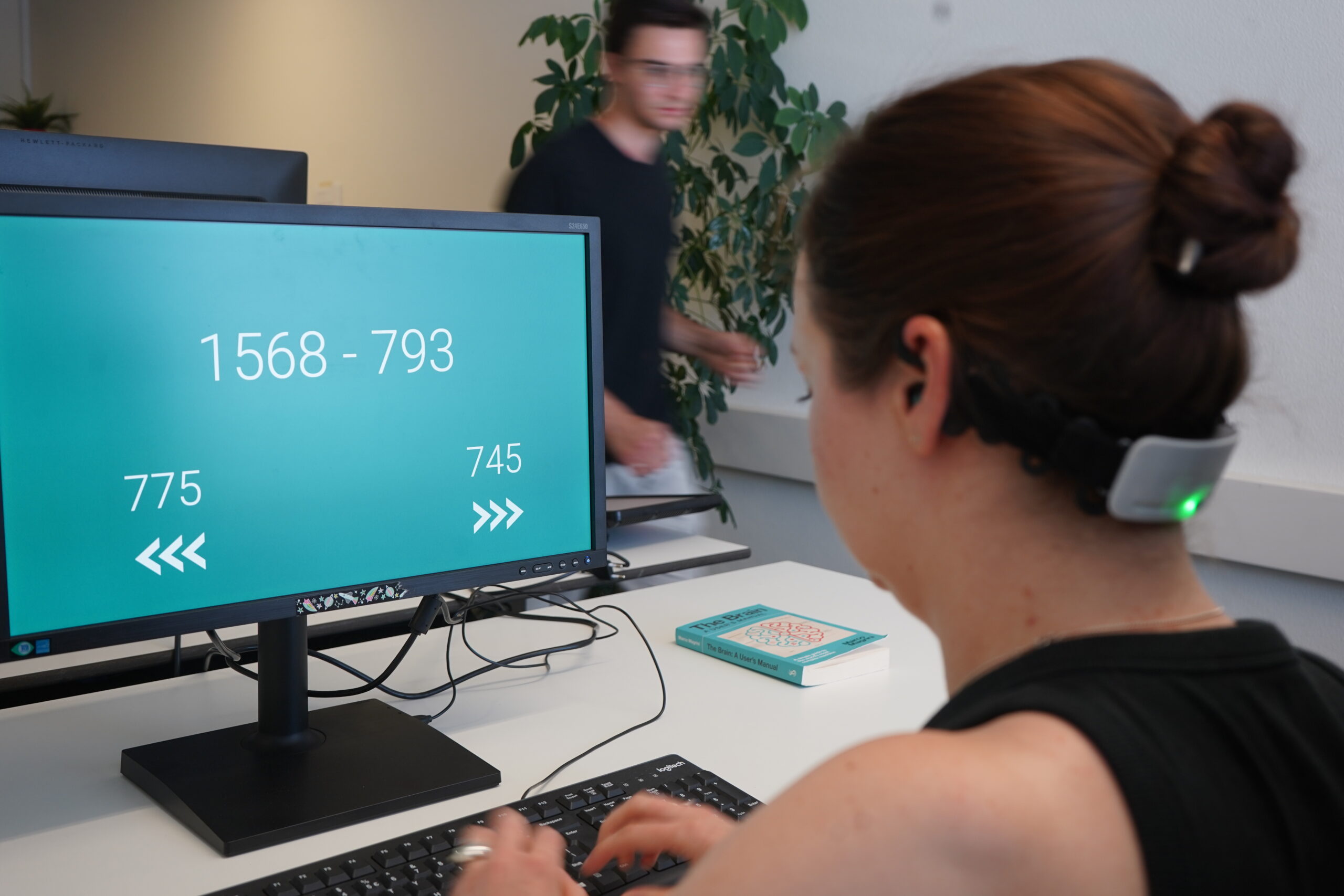

In-ear EEG could use the signals it picks up, such as brain activity and eye movements, as input controls for interactive applications.

In-ear EEG makes continuous monitoring of cognitive states in real-world settings possible. The resulting insights into human cognition have applications across many domains, including high-stakes professions, education, and personalized learning environments.


Exploring the Cutting-Edge Developments in BCI: Insights from the 10th International BCI Meeting

Our CTO, Moritz Thielen, attended the 10th…

IDTechEx and IDUN Podcast

In this episode of the IDUN Technologies podcast series, Tess Skyrme, a Technology Analyst from IDTechEx,…